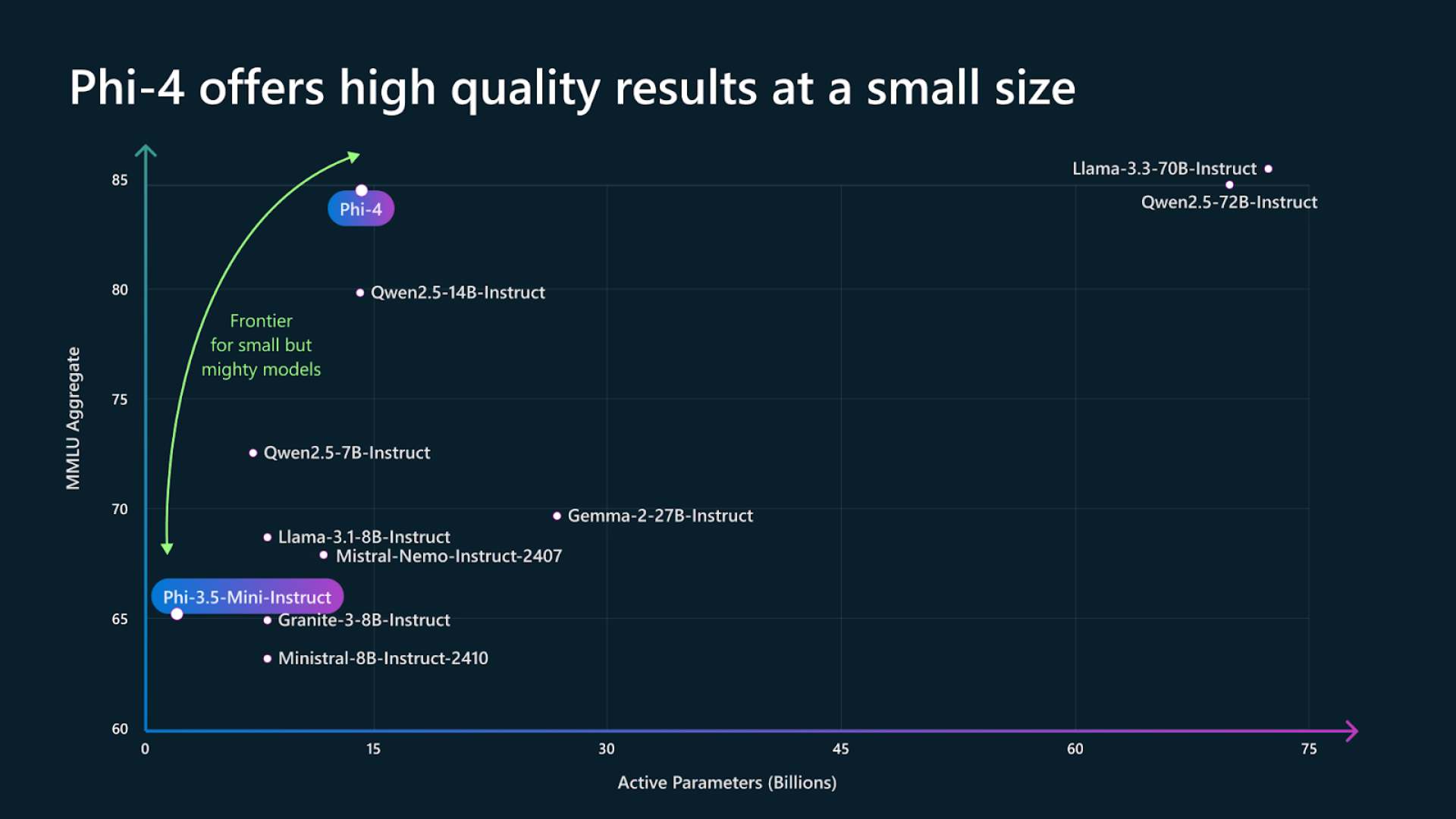

Microsoft has launched three new open-weight reasoning models: Phi 4 mini reasoning (3.8 B parameters), Phi 4 reasoning (14 B parameters), and Phi 4 reasoning plus (14 B parameters, with additional reasoning-focused tuning). According to Microsoft, they deliver performance on complex math, science, and coding tasks that is competitive with much larger models such as DeepSeek R1 (671 B parameters) and OpenAI’s o3-mini. Available immediately on Hugging Face and Azure AI Foundry, the models extend Microsoft’s Phi small-model family and let developers integrate reasoning AI into edge devices and cloud services alike.

Background and Phi Family Evolution

A year after introducing the first Phi small language models, Microsoft has expanded the lineup with reasoning variants designed to fact-check and verify multi-step solutions. The move responds to growing demand for on-device intelligence in educational, professional, and IoT contexts, where high accuracy and low-latency inference are critical.

Model Overviews

Phi 4 Mini Reasoning (3.8 B)

- Training Data: 1 M synthetic math problems generated by DeepSeek’s R1 model.
- Use Case: Designed for lightweight “embedded tutoring” and real-time educational assistance on resource-constrained hardware.
- Parameter Count: 3.8 B, striking a balance between footprint and reasoning accuracy.

Phi 4 Reasoning (14 B)

- Training Data: High-quality web corpus plus curated demonstration traces from OpenAI’s o3-mini.
- Capabilities: Excels at complex math, science, and code generation tasks, supporting both cloud and edge deployment scenarios.

Phi 4 Reasoning Plus (14 B tuned)

- Enhancement: Builds on Phi 4 by integrating reasoning-focused fine-tuning, boosting benchmark accuracy without increasing the parameter count.
- Benchmark Parity: In Microsoft’s internal tests, performs on par with DeepSeek R1 and equals OpenAI’s o3-mini on OmniMath, suggesting that a 14 B-parameter model can compete with a 671 B-parameter system on these tasks.
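To put the parameter counts above in perspective, the following is a rough back-of-the-envelope sketch of how much storage the weights alone would need. The parameter counts come from this article; the bytes-per-parameter figures (16-bit, 8-bit, and 4-bit storage) are illustrative assumptions, not a statement of how any of these models is actually shipped, and the estimate ignores activations, caches, and runtime overhead.

```python
# Rough weight-storage estimate: parameters x bytes per parameter.
# Parameter counts are taken from the article; the precisions below are
# illustrative assumptions, not the formats the models are distributed in.

PARAM_COUNTS = {
    "Phi 4 mini reasoning": 3.8e9,
    "Phi 4 reasoning / reasoning plus": 14e9,
    "DeepSeek R1": 671e9,
}

# Assumed storage cost per parameter for three common precisions.
BYTES_PER_PARAM = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}


def weight_footprint_gb(num_params: float, bytes_per_param: float) -> float:
    """Return the approximate size of the weights in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, num_params in PARAM_COUNTS.items():
    sizes = ", ".join(
        f"{precision}: {weight_footprint_gb(num_params, nbytes):,.1f} GB"
        for precision, nbytes in BYTES_PER_PARAM.items()
    )
    print(f"{name} -> {sizes}")
```

Under these assumptions, the 3.8 B model needs about 7.6 GB for its weights at 16-bit precision and about 1.9 GB at 4-bit, while a 671 B model needs about 1,342 GB at 16-bit. That gap is the reason the smaller models are candidates for edge hardware at all.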

Benchmark Performance

- OmniMath: Microsoft reports that Phi 4 reasoning plus and Phi 4 mini reasoning score on par with o3-mini on this advanced math problem set.
- SWE-Bench & Codeforces: Phi 4 reasoning outperforms earlier Phi models and rivals R1 on software-engineering benchmarks, illustrating its coding proficiency.

Availability and Licensing

All three Phi 4 reasoning models are released under a permissive MIT license and accessible on Hugging Face and Azure AI Foundry, ensuring open-weight distribution and integration flexibility. Documentation, technical reports, and example notebooks accompany each model to streamline developer adoption.
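As a minimal sketch of what trying one of the models from Hugging Face might look like, the snippet below uses the `transformers` library. The repository IDs in `MODEL_IDS` are assumptions about how the models are named on the Hub and should be checked against the model cards; `build_messages` and `generate_answer` are hypothetical helper names introduced only for this illustration.

```python
# Sketch only: loading a Phi 4 reasoning model with Hugging Face transformers.
# The repository IDs below are ASSUMED names; verify them on the Hub first.

MODEL_IDS = {
    "mini": "microsoft/Phi-4-mini-reasoning",
    "standard": "microsoft/Phi-4-reasoning",
    "plus": "microsoft/Phi-4-reasoning-plus",
}


def build_messages(question: str) -> list:
    """Wrap a question in the chat-message format expected by chat templates."""
    return [{"role": "user", "content": question}]


def generate_answer(question: str, variant: str = "mini", max_new_tokens: int = 512) -> str:
    """Download the chosen variant (on first use) and generate a reply."""
    # Imported lazily so the helpers above work without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_id = MODEL_IDS[variant]
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype="auto")

    # Apply the model's own chat template and tokenize in one step.
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    )
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Keep only the newly generated tokens, dropping the echoed prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0, prompt_length:], skip_special_tokens=True)
```

Calling `generate_answer("What is the derivative of x**3?")` would download several gigabytes of weights on first use, so the function is defined here but not invoked. Reasoning models tend to produce long intermediate traces, so a larger `max_new_tokens` may be needed for harder problems.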

Business Impact and Use Cases

- Educational Technology: On-device tutoring solutions can leverage Phi 4 mini reasoning to provide adaptive math help in regions with limited cloud connectivity.
- Edge and IoT Applications: Smart factories and healthcare devices gain local reasoning capabilities without constant cloud offload, reducing latency and bandwidth costs.
- Enterprise Automation: RPA workflows and knowledge-management systems can integrate Phi 4 reasoning for accurate data extraction and decision support.

Expert Perspectives

- Kyle Wiggers (TechCrunch): “These new reasoning models redefine what’s possible with small, efficient AI,” highlighting their benchmark parity with much larger systems.
- Microsoft Research: “Phi 4 reasoning expands the horizon for edge AI, enabling fact-checked solutions on lightweight devices,” underscoring the model’s educational promise.

Microsoft’s Phi 4 reasoning series (mini, standard, and plus variants) suggests that small-parameter models can rival far larger AI systems on complex reasoning benchmarks. By combining open licensing with a footprint small enough for edge deployment, these models support a broad spectrum of applications, from education to industrial automation. As enterprises and developers seek to deploy reliable AI closer to users and devices, Phi 4 reasoning offers a path to high-accuracy, low-latency intelligence at the edge.