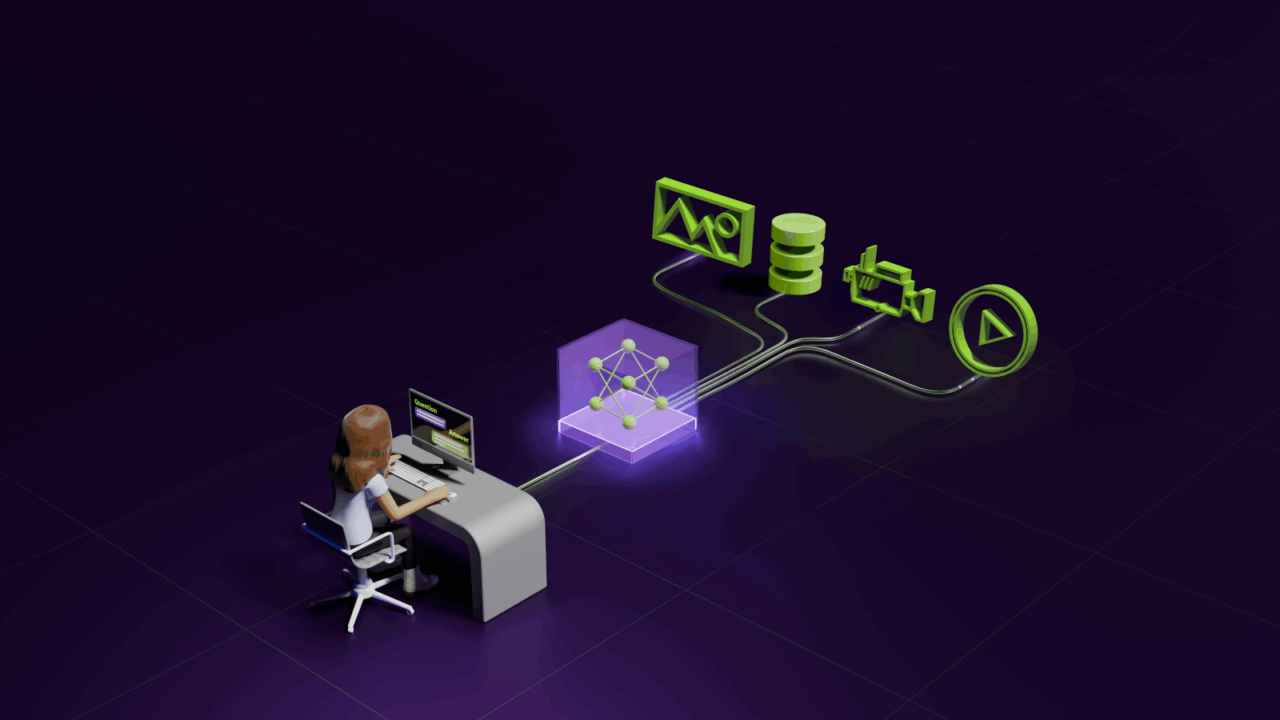

NVIDIA’s Video Search and Summarization (VSS) Blueprint is a ready-to-deploy pipeline that turns live or archived video into searchable, summarizable text and interactive Q&A agents, up to 100× faster than manual review. It combines vision–language models (VILA), large language models (Llama Nemotron), automatic speech recognition (Parakeet ASR), and retrieval-augmented generation (RAG), all served as NVIDIA NIM microservices. It runs on hardware ranging from RTX PRO 6000 workstations and the DGX Spark desktop system to data-center GPUs such as A100 and H100, and serves use cases from manufacturing training to smart-city monitoring and security compliance.

What Is the VSS Blueprint?

The VSS Blueprint is part of NVIDIA AI Enterprise and Metropolis, providing reference workflows and microservices to build video-analytics AI agents.

It ingests video streams, extracts frames and audio, generates captions, transcribes speech, and indexes all metadata for fast retrieval and summarization.

Developers interact with VSS through REST APIs such as /search, /summarize, and /query, which makes integration into existing apps or dashboards straightforward.
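
As a rough illustration of that integration, here is a minimal client sketch using only the Python standard library. It is not the official VSS client: the base URL and port and the JSON field names (`id`, `prompt`, `chunk_duration`) are hypothetical placeholders, so check the VSS API reference for the real request schema before adapting it.

```python
import json
import urllib.request

# Hypothetical base URL; the real host and port depend on your deployment.
VSS_BASE_URL = "http://localhost:8100"


def build_summarize_request(video_id: str, prompt: str, chunk_duration_s: int = 60) -> urllib.request.Request:
    """Build (but do not send) a POST request to a /summarize endpoint.

    The JSON field names are illustrative assumptions, not the documented VSS schema.
    """
    payload = {
        "id": video_id,                      # hypothetical: ID of a previously uploaded video
        "prompt": prompt,                    # what the summary should focus on
        "chunk_duration": chunk_duration_s,  # hypothetical: seconds of video per chunk
    }
    return urllib.request.Request(
        url=f"{VSS_BASE_URL}/summarize",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout_s: float = 300.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_summarize_request("my-video-id", "Summarize safety incidents on the loading dock.")
    print(req.get_method(), req.full_url)
    # send(req) only works against a running VSS deployment.
```

Separating request construction from sending keeps the payload easy to inspect and test without a live server.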

How It Works

- Ingestion & Chunking: A stream handler splits live or stored videos into chunks for parallel processing.
- Computer Vision & Captioning: Vision–language models (VILA) analyze frames to detect objects, actions, and scenes, generating detailed captions.
- Audio Transcription: Parakeet ASR converts spoken content into text, adding context for multimodal understanding.
- RAG Pipeline: Embeddings of captions and transcripts are stored in a vector database; for each query, the most relevant chunks are retrieved and a Llama Nemotron LLM synthesizes a natural-language answer from them.
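
The chunk-then-retrieve flow can be illustrated with a small, self-contained Python sketch. This is not VSS code: a bag-of-words count vector stands in for a real embedding model, an in-memory list stands in for the vector database, and the caption/transcript strings are made-up examples. Only chunking and retrieval are shown; in the pipeline described above, the retrieved text would then be passed to the LLM to compose the answer.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    start_s: float  # chunk start time in seconds
    end_s: float    # chunk end time in seconds
    text: str       # caption plus transcript for this time window


def chunk_boundaries(duration_s: float, chunk_s: float, overlap_s: float = 0.0) -> list[tuple[float, float]]:
    """Split a video of duration_s seconds into fixed-length, optionally overlapping windows."""
    if duration_s <= 0 or chunk_s <= 0 or not 0 <= overlap_s < chunk_s:
        raise ValueError("need duration_s > 0, chunk_s > 0 and 0 <= overlap_s < chunk_s")
    step = chunk_s - overlap_s
    bounds, start = [], 0.0
    while True:
        end = min(start + chunk_s, duration_s)
        bounds.append((start, end))
        if end >= duration_s:  # this window reaches the end of the video
            return bounds
        start += step


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(chunks: list[Chunk], query: str, k: int = 2) -> list[Chunk]:
    """Return the k chunks most similar to the query (stand-in for a vector-database search)."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c.text)), reverse=True)[:k]


if __name__ == "__main__":
    windows = chunk_boundaries(duration_s=180, chunk_s=60)  # three 60 s windows
    texts = [
        "A worker loads boxes onto a forklift. Transcript: start the morning shift.",
        "The forklift stops near the dock door. Transcript: watch your step.",
        "A person in a red vest inspects a pallet. Transcript: pallet looks damaged.",
    ]
    chunks = [Chunk(s, e, t) for (s, e), t in zip(windows, texts)]
    for c in retrieve(chunks, "damaged pallet", k=1):
        print(f"{c.start_s:.0f}-{c.end_s:.0f}s: {c.text}")  # prints the 120-180s chunk
```

Keeping start and end times on each chunk is what lets an answer point back to a specific moment in the video.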

Key Benefits

- Speed: Summarize an hour of 4K video in under one minute, which is more than 60× faster than real time.
- Scalability: Process hundreds of concurrent streams on multi-GPU clusters, or process archived video in burst mode on a single GPU.
- Flexibility: Deploy on-premises, at the edge (RTX PRO 6000), or in the cloud (NVIDIA AI Enterprise) without code changes.
- Multimodal Insights: Fuse visual, textual, and audio data for richer situational awareness and interactive Q&A.
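
A speed figure like the one above is easiest to compare as a real-time factor: the ratio of video duration to processing time. For one hour of footage (3600 s), finishing in 60 s corresponds to 60×, and reaching 100× would require finishing in 36 s.

```latex
\text{real-time factor} = \frac{T_{\text{video}}}{T_{\text{proc}}}, \qquad
\frac{3600\ \text{s}}{60\ \text{s}} = 60, \qquad
T_{\text{proc}}\big|_{100\times} = \frac{3600\ \text{s}}{100} = 36\ \text{s}
```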

Industry Use Cases

- Manufacturing Training: Pegatron uses VSS to summarize assembly-line videos, cutting onboarding time by 50% and reducing defect rates.
- Smart-City Monitoring: City planners index traffic feeds, summarize congestion trends, and trigger accident alerts, improving response times by 30%.
- Security & Compliance: Financial firms retrieve CCTV events instantly for audits, accelerating investigations and enhancing oversight.
- Retail Analytics: Store managers query shopper patterns (“Which aisles were busiest at noon?”) and get concise summaries to optimize staffing.

Deployment & Integration

- Hardware: Supports A100, H100, H200, A6000, and L40/L40S GPUs for both pilots and production-scale deployments.
- Software: Delivered as Docker containers and Helm charts, with full source on GitHub for customization.
- APIs: Simple REST endpoints enable rapid prototyping and embedding into BI tools, mobile apps, or custom UIs.
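
Before a pilot, it can help to check whether the local GPUs belong to the families in the hardware list. The sketch below reads GPU names from `nvidia-smi --query-gpu=name --format=csv,noheader` (a standard nvidia-smi query) and matches them against that list. The whole-token matching rule is an assumed heuristic, and the list itself should be verified against the current VSS support matrix.

```python
import shutil
import subprocess
from typing import List, Optional

# GPU families from the hardware list above (verify against the current VSS support matrix).
SUPPORTED_GPUS = ("A100", "H100", "H200", "A6000", "L40", "L40S")


def parse_gpu_names(nvidia_smi_output: str) -> List[str]:
    """Split `nvidia-smi --query-gpu=name --format=csv,noheader` output into one name per GPU."""
    return [line.strip() for line in nvidia_smi_output.splitlines() if line.strip()]


def matching_family(gpu_name: str) -> Optional[str]:
    """Return the listed family that appears as a whole token in the GPU name, else None.

    Whole-token matching (an assumed heuristic) keeps an "NVIDIA L4" from matching "L40".
    """
    tokens = gpu_name.upper().replace("-", " ").split()
    for family in SUPPORTED_GPUS:
        if family in tokens:
            return family
    return None


def local_gpu_names() -> List[str]:
    """Query local GPU names with nvidia-smi; return [] if it is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_names(result.stdout)


if __name__ == "__main__":
    for name in local_gpu_names():
        family = matching_family(name)
        status = f"in the listed families ({family})" if family else "not in the listed families"
        print(f"{name}: {status}")
```

Parsing is kept separate from the subprocess call so the matching logic can be tested without a GPU.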